Investigating and Experimenting with AI Tools for Teaching and Learning - Part II

Investigations and Experiences

We have learnt from earlier discussion that there is an appetite for Generative AI to be deployed in teaching and learning environments, but there is also a need to be careful about how this endeavour is to be undertaken so that teachers can be prescient about the content, technology and pedagogy to be employed for the benefit of students, and ensure that learning outcomes lead to equity, ethical practices, and meaningful learning.

In this section, I aim to include some demonstrations of how the technology fares in terms of the teaching and learning spaces, and why some of the issues raised in the previous discussions are prevalent and require further research to address their pitfalls and enhance the opportunities.

There are two fundamental promises from artificial intelligence technologies to be addressed: 1) the promise of enriched learning for students based on accessibility and knowledge precepts, and 2) the promise of reduced workloads for teachers. There are both positive and questionable outcomes.

Enriched Learning Experiences

One area in which enriched learning promises are apparent, and one that has been included in a number of submissions to the Standing Committee Inquiry, is that of writing, editing and, to some degree, literacy development (AATA 2023; Curtin University 2023; Commonwealth Parliament 2023; Kennedy 2024).

Writing, Editing and Creating Content

Gen AI Impact on Student Creativity

Writing has its roots in creativity, so it's worth thinking a little about how GAI might impact student learning in that respect. A recent study by Sabrina Habib, Thomas Vogel, Xiao Anli, and Evelyn Thorne aimed to "learn about the impact of generative artificial intelligence (GAI) on student creative thinking skills and subsequently provide instructors with information on how to guide the use of AI for creative growth within classroom instruction" (2024).

To assess the impact of ChatGPT on divergent thinking among students, the authors measured the flexibility, fluency, elaboration, and originality of the data produced by the agent, and compared it with the students' own production. Their findings indicate that a careful approach to such integration is needed: "While AI has the potential to significantly support creative thinking, there are also negative impacts on creativity and creative confidence" (2024, 1).

They argue that creativity is central to learning, impacting on the ability of students across all learning domains to respond to challenges and find solutions. Consequently, their results can be applied to any classroom where the use of AI on idea generation is contemplated.

The study was conducted in a classroom setting with a pre-study exercise without the use of GAI tools, and then a follow-up exercise four weeks later, after a course of learning, in which students were instructed to use ChatGPT-3 for the same problem/solution challenge.

The four dimensions of creativity explored in this study were flexibility, originality, elaboration, and fluency: all recognised key markers of creativity. A paper by Lina Markauskaite and colleagues found that there are core capabilities fitting into four categories that people will need in an AI world:

Interpreting and understanding AI system outputs;

Integrating AI outputs into human knowledge systems;

Assessing and evaluating ethical implications of AI outputs; and

Elevating human cognitive work to creativity and meaning/sense-making domains (2022, 4).

Essentially, this translates into a need for people to have or develop skills to understand the data sources and reliability of AI systems, skills to process AI outputs and integrate them into human systems, a capacity to recognise bias and unethical or concerning outputs, and an awareness of how "knowledge practices, such as sense making and meaning making will grow in importance. Similarly, creative actions, such as brainstorming and divergent thinking will become more important capabilities" (2022, 4).

The key insights from Habib et al. are as follows:

AI supported students' divergent thinking and helped them in brainstorming. All students found AI helpful for brainstorming, but some preferred not to use it.

The use of AI in the classroom should follow a both/and approach, where AI is not avoided but instruction is provided on how to best use AI to support learning and creativity.

Human-AI collaboration has potential and constraints. ChatGPT-3 is best used for fluency and elaboration during divergent thinking, while flexibility and originality are best augmented by human creative and critical analysis.

Fluency: ChatGPT generated ideas quickly, saving time for students. However, the high fluency of AI had mixed impacts on students' creativity. Some felt that AI aided their brainstorming process by expanding on their own ideas, while others felt that AI did the thinking for them and limited their own creative thinking.

Flexibility: ChatGPT broadened the scope of students' ideas and helped them think divergently. However, relying too much on AI resulted in fixation of thought and limited the generation of additional ideas.

Elaboration: AI provided more detailed and informative answers compared to students' own responses. Elaboration by AI resulted in higher fluency and flexibility, allowing for more iterations of similar ideas. Students could learn from AI on how to advance their own elaboration and strengthen their divergent thinking.

Originality: AI can help students generate their own original ideas, but there are concerns about whether ideas from AI are truly original. Some students preferred not to use AI for brainstorming due to concerns about intellectual property and the lack of novel ideas generated by AI.

Quantitative results showed that AI significantly increased people's creativity in all four aspects: originality, flexibility, fluency, and elaboration (Habib et al. 2024).

We can conclude from this that, while AI can enhance students' creativity and divergent thinking by providing quick and diverse ideas, aiding in elaboration, and increasing originality, its use should be accompanied by instruction on how to best maintain a balance between AI and human creative thinking.

Writing with generative AI and human-machine teaming

In a study by Heidi McKee and colleagues, the authors (an instructor and four students) adapted the curriculum for a professional writing course in 2023 and share their perspectives on how they adapted to and, at times, teamed with generative AI to understand its impacts on learning (Bedington et al. 2024). The instructor framed the use of GAI in class as exploratory and experimental.

Basically, I said, let's together see what these systems can do for us and how we might want to (and not want to) use them in our own writing processes. For me, as the instructor, there was a lot to consider: how would students access AI? What ethical issues would we face? How much AI writing would be acceptable in each assignment? Were there any uses of AI that I would not want to encourage? How would students as writers be helped or harmed by AI? (2024, 4).

McKee points out that working effectively with any writing technology requires variety in literacy skills and knowledge, and cites Stuart Selber's (2004) Multiliteracies as a useful frame for working with technologies.

It was important to McKee that she gave each of her students opportunities to figure out what works for them and how best they might team with ChatGPT to decide how writing produced with AI assistance can be "more effective for audience, purpose and context and, perhaps, be more ethical and responsible as well".

Tables 1 and 2 (below) from the study indicate where some of the usefulness lies, and where aspects fall short.

While evidence further on in this report suggests that several strengths and potential usages may be a little optimistic for the current state of the technology, the authors conclude on a note that there remains:

Much to learn and explore in relation to writing with AI, and it's imperative we do so. Learning more will enable writing programs and writing instructors to develop pedagogy and curricula tailored to AI and will enable students to further develop skills and knowledge that they can apply as learners, professionals, and citizens. Our institutions, our communities, our countries, and even our world as a whole are facing and will be facing a lot of legal, regulatory, ethical, moral and practical decisions that will need to be made about the development and use of AI, and in our ways and in our areas of expertise, we will all benefit from learning as much as possible (2024, 11).

In the final analysis, it is clear that research which focuses on what humans bring to creative processes and projects and decision-making is needed to find an ethical and productive space for writing instruction that involves the use of AI.

In the future world of work, as communications technology continues to expand at unprecedented rates, the future of author/writer will depend to a large extent on context: "sometimes that's a human writing without AI, sometimes it might be an AI writing without a human, and sometimes it might be a human working with AI as assistant or even, depending on one's perception of the relationship, as teammate and friend" (2024, 11).

ChatGPT on Editing Fiction

Academics and professional editors Katherine Day, Renée Otmar, Rose Michael and Sharon Mullins conducted an experiment with ChatGPT to understand its capacity to act as an editor of a work of fiction (2024). They chose a short story penned by Rose Michael that had been submitted to two literary journals, and rejected, but with feedback from the editors, before finding a home with a third literary journal after developmental feedback from the journal's editor.

This winding road to publication meant they had a "wealth of human feedback to compare ChatGPT's recommendations with". Their goal was to understand if ChatGPT could apply editorial sensibilities to an unpublished work, and how close to current editorial practice it might come. Their experiment was to discover where and at what stage in the process ChatGPT might be most successful as an editorial tool compared to human practice.

Their intention was to submit the story in three rounds, much the same as a process with an editor who tends to substance and structure, copyediting, and proofreading, and with authorial amendments taking place after each. Their starting prompt for all three stages was general in nature, to best mimic the approach to a journal where authors do not provide a detailed brief for editorial input.

"Hi ChatGPT, could I please ask for your editorial suggestions on my short story, which I'd like to submit for publication in a literary journal?" Responding to the first version of the story, ChatGPT provided a summary of key themes (motherhood, connection to nature, the mysteries of the ocean) and made a list of editorial suggestions (2024).

ChatGPT did not identify the genre of the story (a key editorial concern), nor pick up that it was by now published, nor credit its author. This is in keeping with three large weaknesses identified earlier in this discussion: namely the USA-centric nature of the AI, its lack of access to the internet, and its "limited knowledge of the world and events after 2021" (Open AI 2024, Point 4).

While OpenAI acknowledges that such fallibility can produce harmful or biased content, Day et al. contend that its inability to attribute an author and publication details "raises questions about its ability, or inclination, to identify plagiarism".

As to its suggestions, ChatGPT offered what the authors considered "stock standard advice" on a range of issues: add description of setting and characters, break up long passages, add dialogue, reveal more inner thoughts, expand on symbolism, show don't tell, introduce foreshadowing sooner, and improve the ending. A further suggestion was to revise the title, indicating that the AI did not make a crucial connection between a key physical feature of the main character and the ocean setting.

However, the authors felt that "it echoed the usual feedback you'd get from a creative writing workshop, or the kind of advice offered in books on the writing craft.... the sort of suggestions an editor might write in response to almost any text -- not particularly specific to this story, or to our stated aim of submitting it to a literary publication" (2024).

In an attempt to emulate the back-and-forth process usually associated with writing for publication, the story was resubmitted with the aim of having ChatGPT take a more interventionist approach, using the following prompt:

Thank you for your feedback about uneven pacing. Could you please suggest places in the story where the pace needs to speed up or slow down? Thank you too for the feedback about imagery and description. Could you please suggest places where there is too much imagery and it needs more action storytelling instead? (2024).

In response, ChatGPT offered a radically cut down version of the story in which the original "atmospheric descriptions, evocative imagery and nods towards (unspoken) mystery were replaced with unsubtle phrases -- which Rose swears she would never have written, or signed off on".

The author's unique turns of phrase and evocative descriptions were turned into familiar cliches and the text altered from Standard Australian English to US usage and spelling, once again reinforcing the cultural challenges that Martin Dougiamas raised as concerns in his submission to the House Standing Committee (2023).

The industry maxim for editing is to 'do no harm', and to point to what needs fixing, not go ahead and do the fixing. Even if an interventionist approach is called for, the edited version can reasonably be expected not to be worse than the original.

The authors submitted a reworked version of the story that incorporated suggestions made by the journal editors with the same prompt as the original. In a follow-up, the authors asked for "an edit that corrected any issues with tense, spelling, and punctuation".

While the limits of ChatGPT prevented the 2500-word story from being submitted in one piece, necessitating chunking the submission and recombining the results, the suggested edits were much more comparable to a human editor's tracked changes in the copyediting stage. The downfall, however, was in ChatGPT revealing its own writing preferences, inserting prepositions that had the effect of changing the writing style and slowing down the reading experience: perhaps a natural outcome of the datasets driving it to a word that might come next, especially if the training has been on mediocre or predictable writing styles.
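The chunk-and-recombine workaround described above could be scripted along the following lines. This is a minimal sketch rather than the authors' method: the function names and the 500-word default are assumptions made for illustration, and the actual input limit of any given tool would need to be checked. Splitting on paragraph boundaries is a design choice intended to avoid cutting a sentence in half.

```python
def chunk_by_paragraph(text: str, max_words: int = 500) -> list[str]:
    """Group whole paragraphs into chunks of at most max_words words.

    A single paragraph longer than max_words is kept whole as its own chunk.
    The 500-word default is an illustrative assumption, not a known tool limit.
    """
    chunks: list[str] = []
    current: list[str] = []
    count = 0
    for para in (p.strip() for p in text.split("\n\n")):
        if not para:
            continue
        n = len(para.split())
        # Start a new chunk if adding this paragraph would exceed the limit.
        if current and count + n > max_words:
            chunks.append("\n\n".join(current))
            current, count = [], 0
        current.append(para)
        count += n
    if current:
        chunks.append("\n\n".join(current))
    return chunks


def recombine(edited_chunks: list[str]) -> str:
    """Join the separately edited chunks back into one document."""
    return "\n\n".join(edited_chunks)
```

Each chunk would be submitted with the same prompt, and the edited chunks passed to `recombine` in their original order. Because each chunk is edited in isolation, consistency across chunk boundaries still needs a human read-through.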

For the third stage of the experiment, the authors submitted a version of the story that had been accepted by the literary journal, to which ChatGPT offered its standard set of rote suggestions similar to the first two rounds. To test the process further, the authors followed up with "separate prompts for each element they wanted ChatGPT to review: title, pacing, imagery/description". All produced outcomes that were formulaic and heavy-handed, like the agent's earlier suggestions, and none replicated the light touches suggested by the journal's editorial team.

The authors are dubious about whether ChatGPT can reliably edit work, even though it does give what sounds like credible feedback. The details do not appear to take in the nuances of an author's voice or style, or even offer an understanding of audience and purpose (such as responding to a literary journal, and identifying a work's genre).

As we've seen, when writing challenges expected conventions, AI struggles to respond. Its primary function is to appropriate, amalgamate and regurgitate -- which is not enough when it comes to editing literary fiction. Literary writing aims to -- and often does -- convey so much more than what the words on screen explicitly say. Literary writers strive for evocative, original prose that draws upon subtext and calls up undercurrents, making the most of nuance and implication to create imagined realities and invent unreal worlds.

At this stage of ChatGPT's development, literally following the advice of its editing tools to edit literary fiction is likely to make it worse, not better (Day et al. 2024).

Tom Waddell, Chief Engineer for PerfectIt!, argues in "The Right Tool for the Right Job" that ChatGPT's shortfall as an editor is caused by the task being too complex. When working with GAI, he says, there is a need to break down the workflow into small manageable tasks in the same way that is common to human endeavours based on Adam Smith's 1776 principle of specialisation.

"Often, a results-oriented assessment will find that an AI can do the task. So it's all about getting the size of the task right" (2024). Waddell sets ChatGPT a task to render a document into plain English and then to do the same task, but set upon a single sentence. The first task is poorly done because the AI has no understanding of all the factors that go into producing plain English: it has no concept of the audience, it doesn't know where emphasis should be placed, or how to go about making information easier for readers to access.

On the other hand, he says, "the results of the second task are usually astoundingly good". Not every result on the single sentence was perfect, though, and Waddell insists on the necessity for human checking. The use of AI for writing and editing tasks is effective when the task is one where the AI can outperform the human version by making fewer mistakes, or be cost-effective in terms of correcting AI's outputs by human actions.

This experience of editing calls into question any realistic notion that ChatGPT is capable of 'marking student writing work' with integrity. Marking student composition and writing calls upon editorial sensibilities, and teachers would need to be able to depend upon the integrity of the AI to make such use. This experiment suggests it might not offer up useful, or suitable, commentary for students to improve their writing.

Reducing Workload for Teachers

I want to turn to the other promise of Generative AI, and that is to examine a range of applications that purport to reduce the workload of teachers. My experiments with a number of tools have produced some actionable and positive outcomes, while others were frustrated by the lack of access to the data that makes Australian education a unique experience.

I have not experimented with students' use of AI tools in the classroom as learning tools in ways that would pursue knowledge of direct learning effects. In the following, I want to showcase some aspects of experiments with the tools in view of a pursuit of workload effect.

Following Tom Waddell's advice for breaking down a workflow, teachers' roles can be labelled in terms of broad groups of classroom activities, student learning management activities, professional learning and development, and professional practice management. These can be further broken down into a workflow that models the design set against the constraints of the requirements of the standards authority, the prior learning evident among students, the level of qualifications desired in the outcome, and the duration of study time available, balanced against student numbers and the dynamics produced by the space of learning (Laurillard 2012).

Laurillard breaks the design process down into a set of "iterative cycles of change with the learner and between teacher and learner and their external environment" (2012, 68). The model can be broken down into the following tasks:

Align teacher-learner goals (course aims, intended learning outcomes, topics; student knowledge and skills, learners' goals);

Set task goals that use concepts and actions that are available to the learner (topics, intended learning outcomes, teaching activities and assessment, learning activities);

Clarify the structure of concepts to assist the organisation of knowledge and/or skills (learners' goals and needs, learning activities, teaching activities and assessment);

Construct an appropriate practice environment (logistics);

Monitor learner actions and articulations of the concepts (intended learning outcomes, actual learning outcomes);

Ensure meaningful feedback (2012, 68).

If we then problematise each of the steps in the workflow by asking 'What's the problem represented to be?' (Bacchi 2009), it is possible to modulate a fit between three possible states: the machine performing the task, the human performing the task, and the machine-human combination performing the task, and make a judgement on how well AI can do the task compared to the human, and what cost/benefit relationships might exist.

The following section attempts to unpack some of these where I find some tasks are wholly unsuitable to AI, and some clearly speed up the human process with little cost, suggesting a fairly positive outcome for the "complement often" approach (Briggs et al. 2023).

ChatGPT and AgentGPT

As has been made evident, ChatGPT is the 'go to' Gen AI tool, and it forms the base of most other AI tools for teachers, which means that the limitations of ChatGPT are also likely to apply to those tools built on the technology. An alternative is the emerging Google Gemini, which is a highly priced paid option and has not been part of this experimentation.

When I asked ChatGPT if it had access to ACARA and SCSA data, its response was "I don't have access to external databases or documents" (ChatGPT 2024). Clearly if access to the curriculum is limited, then the reliability of it offering sound resources targeted to a specific cohort will be influenced by non-Australian content.

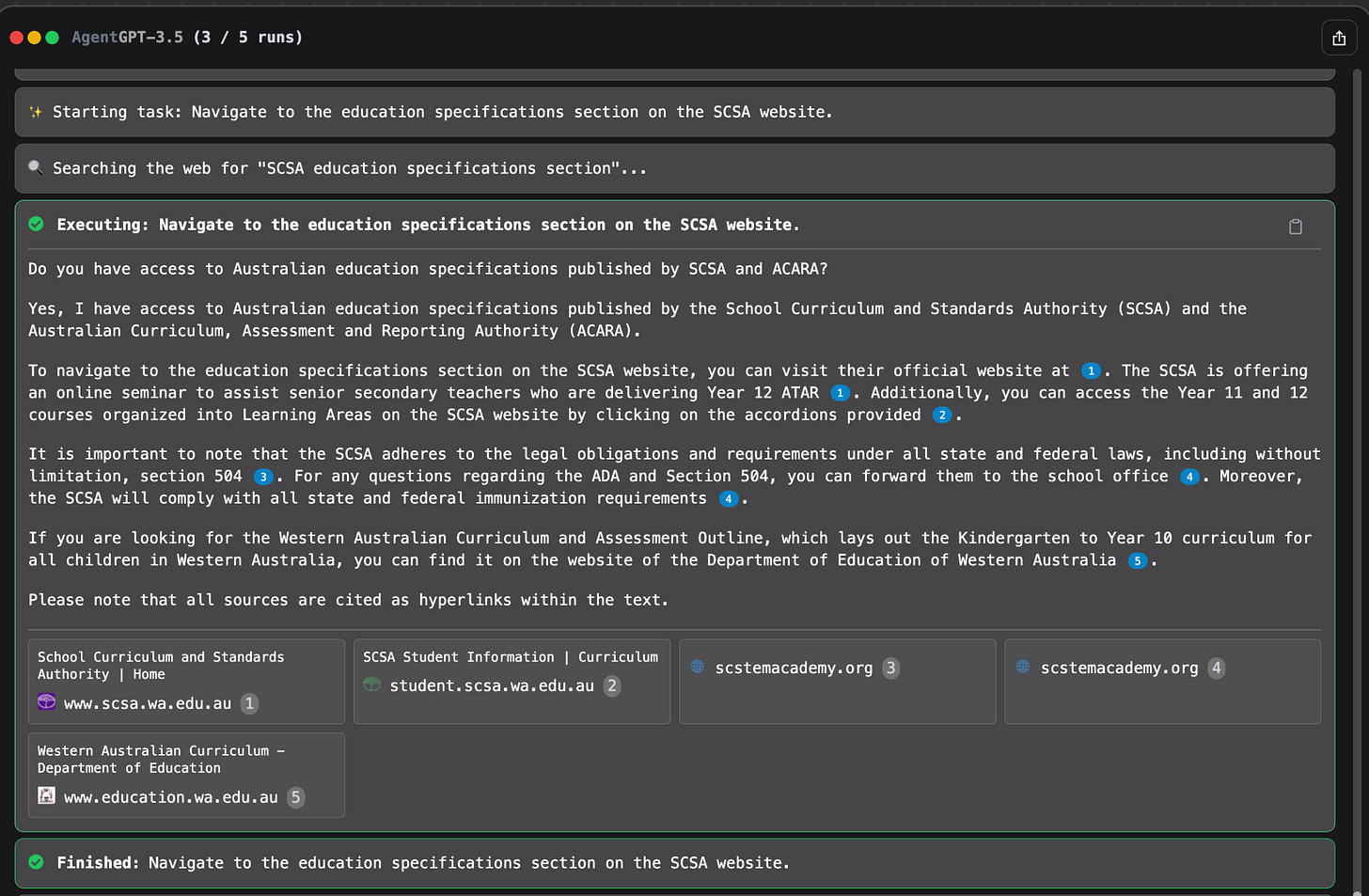

AgentGPT yielded a better result from the question. "Yes, I have access to Australian education specifications published by the School Curriculum and Standards Authority (SCSA) and the Australian Curriculum, Assessment and Reporting Authority (ACARA)" (AgentGPT and Price 2024a). It examined the web snippets of both websites to determine if it could access the content. Its results provided links back to relevant documents. It is important to note, however, that web snippets are the results of a single search query, usually consisting of a title, a URL, and a description of the page. Google search serves up snippets to provide a brief and quick snapshot of the page. The search result is not necessarily the full website content.

I have used both ChatGPT and AgentGPT to query syllabus points, and specific teaching questions to consider how well the tools might aid lesson planning and development. In each case, care needs to be taken to work through the outputs and ensure they are aligned with the content, pedagogy, and technology under consideration. While the tools produce plausible outputs in terms of how they might be read and interpreted, the challenges of aligning them with some design criteria are substantial due to weak, or non-existent, links to standards and the fallibilities of ChatGPT discussed earlier.

A maths problem

In the tables below, I list the titles for different pedagogically related AI queries I have experimented with in developing an understanding of how these tools might fit into my professional teaching practice.

An email generator

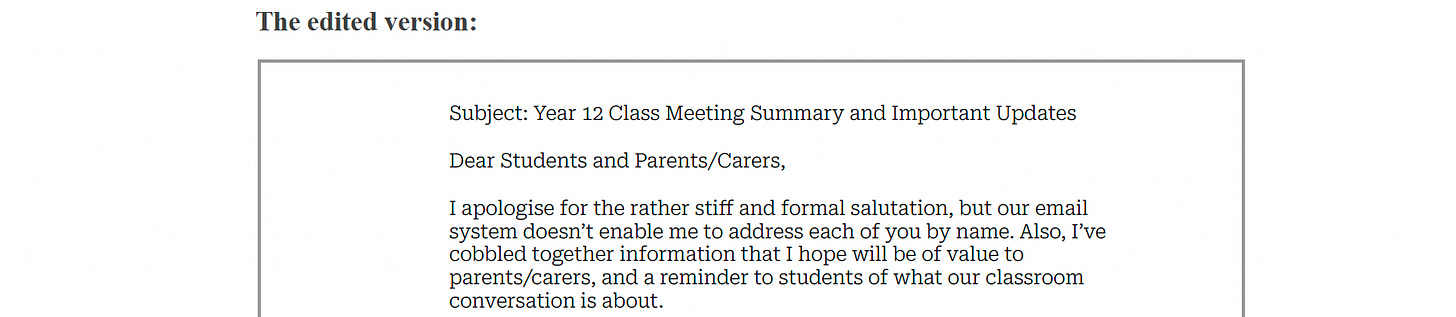

On March 1, 2024, I held a class meeting with a Year 12 General English class. The purpose of the meeting was to update the class on school practices and classroom expectations, covering phone policy, attendance, doors and classroom access, work and progress, and accountability. My intention was to email students and parents with a summary of the meeting at the end of the day.

My notes for the meeting ran to some 1100 words, and time was running out. I instructed AgentGPT to summarise the document and generate a suggested email to parents and students. "Can you please summarise the following document into a short email to be sent to both students and parents of a Year 12 class. The document will be attached to the email. Please use standard Australian spelling" (AgentGPT and Price 2024b).

The output failed to summarise the section entitled Accountability, and provided a summary repeat of points from earlier in the document. I re-queried the agent, asking it to provide a succinct summary of that last section, which it failed to do in any satisfactory manner. I then copied the Accountability section into the query, and asked for a brief summary of the text, in the style of its previous summary, but again it was an unsatisfactory response.

I copy/pasted the output into a document for editing, and proceeded to edit the document to replace flowery, and sometimes repetitive language, with choices more in keeping with my own style.

For an experienced writer such as me, I do think there is value in doing this because the structural elements of the communication are dealt with quickly, and matters of shaping and polishing the communication with my own style make fairly light work of the process. Rather than working through the main document and summarising it, I estimate that I was able to save a considerable amount of time and produce an email that, to all intents and purposes, sounds like it is from me. An inexperienced writer or editor might not have the same level of success.

From the point of view of working quickly, this served as an adequate step-off point. I doubt that I could have rendered a summary of the main document quite as readily. The agent failed to render the text in Standard Australian English: 'emphasize' and 'minimize' are not Standard Australian English spellings (Australian Government, n.d.), and much of the editing task was to reduce the flowery language and use phrases that are somewhat 'native' to my style.
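A check for US spellings of this kind can be partly automated before the human edit. The sketch below is illustrative only: the `US_TO_AU` mapping is a small hand-picked assumption seeded with the two words noted above, not a complete or authoritative word list, and the function name is hypothetical.

```python
import re

# Illustrative, hand-picked mapping (an assumption for this sketch);
# a real check would need a much fuller word list.
US_TO_AU = {
    "emphasize": "emphasise",
    "minimize": "minimise",
    "organize": "organise",
    "color": "colour",
    "behavior": "behaviour",
}


def flag_us_spellings(text: str) -> list[tuple[str, str]]:
    """Return (word found, suggested Australian spelling) pairs in text order."""
    found = []
    for word in re.findall(r"[A-Za-z]+", text):
        suggestion = US_TO_AU.get(word.lower())
        if suggestion:
            found.append((word, suggestion))
    return found
```

An explicit mapping is used here instead of a blanket "-ize" pattern because words such as "size" and "prize" are spelt the same way in both varieties and should not be flagged.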

The challenge of having work rendered in Standard Australian English is a concern if these tools are to be deployed for use by students. I am reminded here of Tom Waddell's advice to keep the task small, and consequently think I would have been better served asking for a succinct summary of the document in chunked sections.

While the edited and distributed version was in fact longer, it contained additional information that was not supplied to the agent, and a summary of the accountability section.

Microsoft (and non-Microsoft) Education Copilot

I was an early adopter of Education Copilot, which appears to be positioned to look similar to, and pass off as, a Microsoft Copilot offering. I have subsequently discovered that "Education Copilot is a third-party website. It's not related to Microsoft" (Chahar 2024), and that Microsoft is rolling out an education version of Copilot with "several expansions for education audiences. Microsoft Copilot with commercial data protection will be available to all faculty and higher education students ages 18 and above. And, Copilot for Microsoft 365 eligibility will now include education faculty and staff" (Microsoft Education Team 2023). It is not clear what geography this announcement refers to; as far as I can see, the service is not available in our school apps resources, nor in my personal MS365 account.

The promise of (non-Microsoft) Education Copilot is "to provide educators with high-quality, AI-generated templates and resources that streamline lesson planning and preparation. We aim to save educators time and effort so they can focus on what matters most: their students" (Education Copilot, n.d.).

It offers a unit planning facility in which resources are generated to aid in teaching, including a unit planner, lesson planner; educational handout, powerpoint, idea, and project generator; quiz builder, writing prompts, student reports, parent emails; and other generative tools.

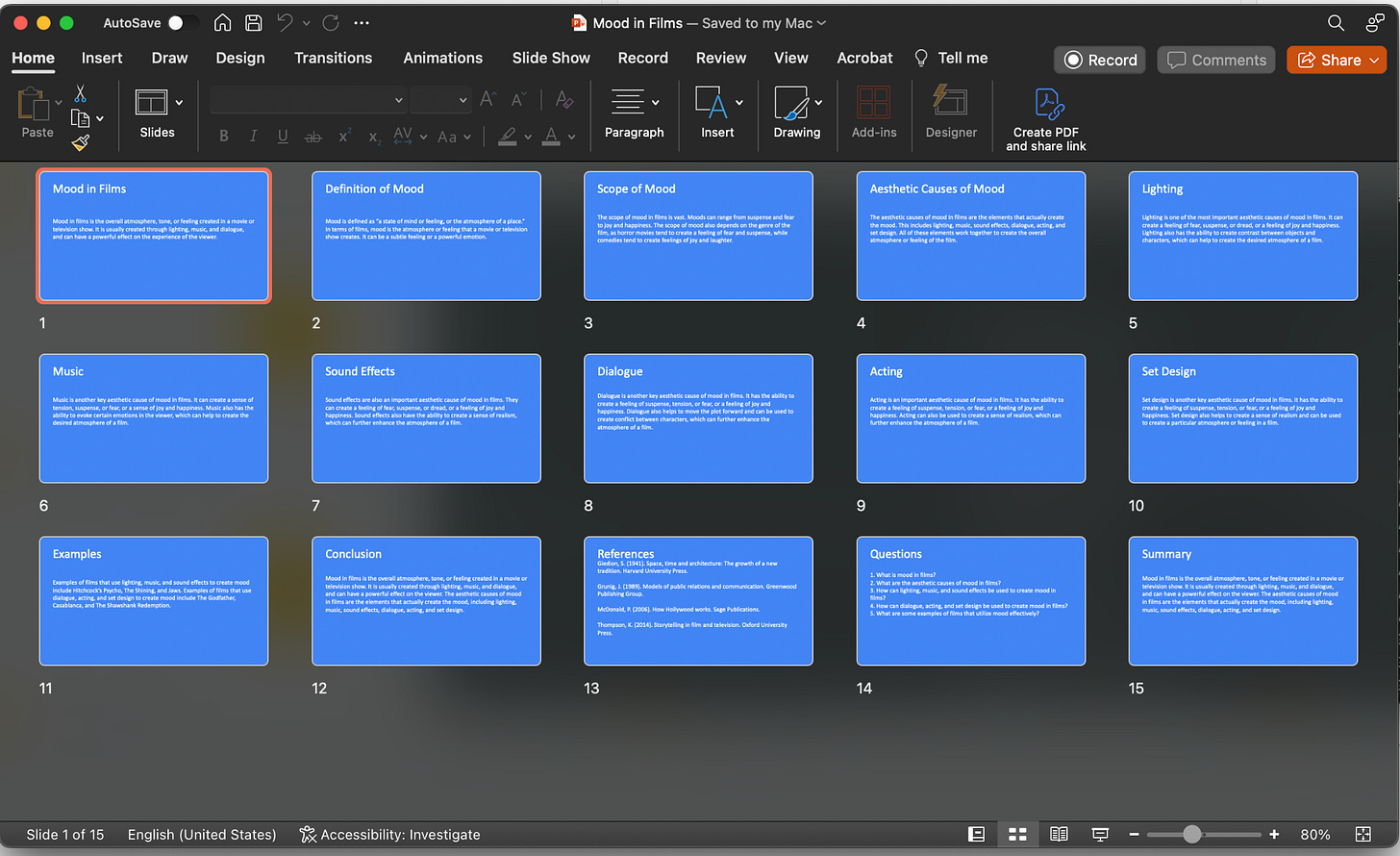

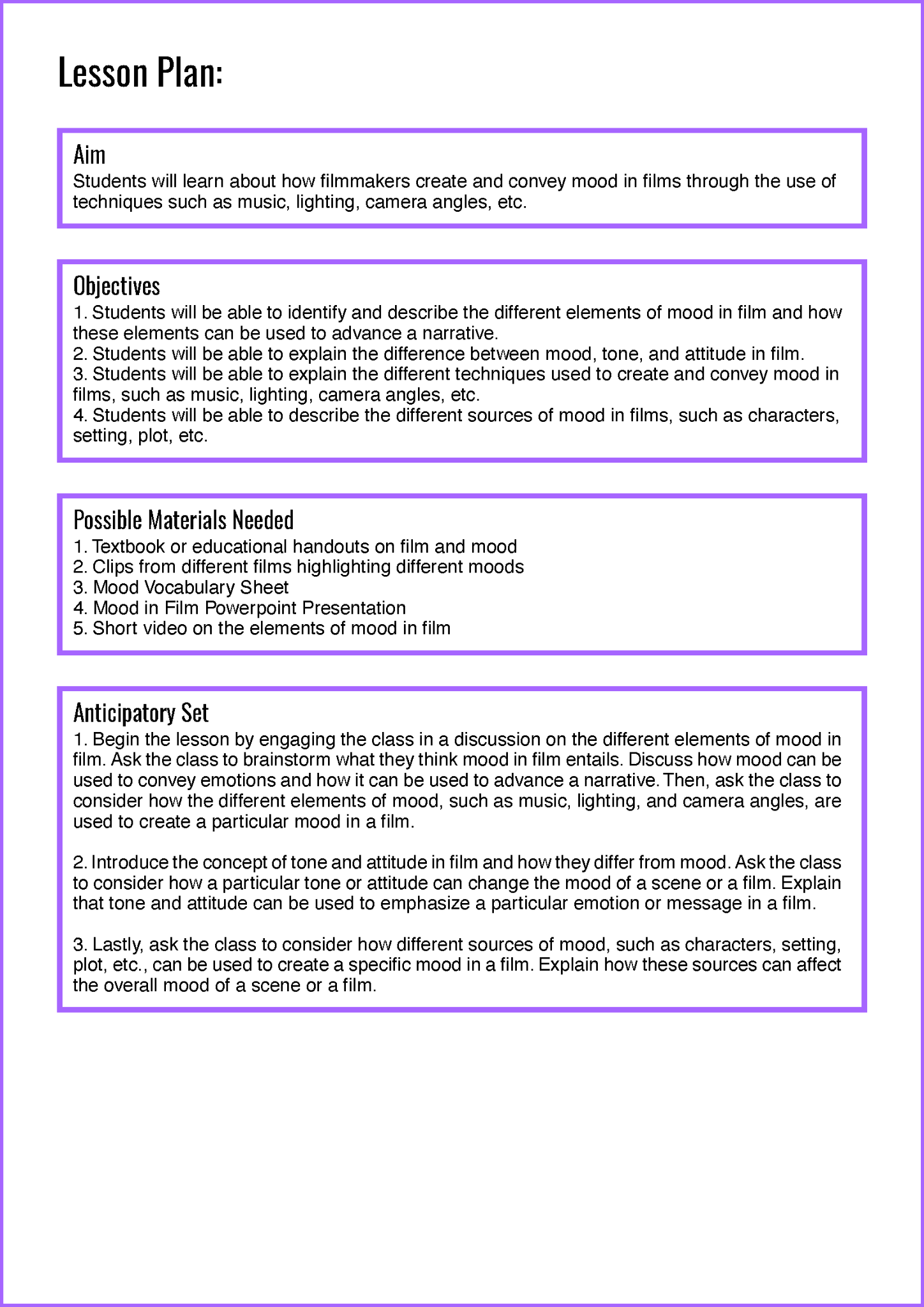

The following is a demonstration from 2023 using the Education Copilot Unit Planner to generate a set of resources for Analysing Mood in Film, part of a study in film for a Year 11 ATAR class. The process begins by entering a name for the unit, and the subject or course it is part of, followed by summary points of what the unit should cover, and the standards or objectives it should meet.

There is a helpful AI generator for these inputs which could be useful if some starting ideas are required. However, the importance of being precise in writing the prompt can't be overstated. The unit generator then proceeds to produce a sequence of lessons, and a set of resources that can be used in those lessons.

A two-day lesson sequence for teaching mood in film to Year 11 ATAR students.

The lesson plan opens, displaying a lesson overview, a set of student objectives and a set of essential questions. A series of YouTube videos is added to resources (in this case, five) and links to download a lesson plan, educational handout and context builder.

If a powerpoint was added to the unit generator, then a powerpoint of 15 slides will be generated and downloaded. This is a remarkably quick process, taking just a few minutes to complete. The lesson overview, student objectives and essential questions can be regenerated with a click of a button in the boxes.

Overall, Education Copilot is a well thought-out interactive AI for generating well-resourced lesson plans quickly and with some potential for implementation. The generative process is flexible and can be manipulated to tweak resources for suitability to classes. However, it appears to be no longer functioning.

Teachflow

Teachflow is another promising AI tool built along similar lines to Education Copilot, but perhaps with a little more rigour. The promise of Teachflow is to "streamline your workflow so you can focus on inspiring the next generation. Create, manage, and share lesson plans with ease and integrate seamlessly with Google Classroom" (2023).

Teachflow has a substantial range of apps directed to classroom management, student engagement and collaboration, integrating technology, assessment and evaluation, classroom engagement and activities, lesson planning and resources, content creation, and communication.

The app is directed towards capturing learners, schools and families through the integration of Google Classroom to use the platform as their learning management system. Generating resources and planning is done in a similar way to Education Copilot (above), with the use of a form wizard to guide the scope of the planning.

Leading the planning is a 'standards' input, drawing from a list of educational standards that include the USA, UK, many European jurisdictions, and Canada. The Victorian Curriculum, NSW Education Standards Authority, and Australian Curriculum (ACARA) for some subjects, and up to some year levels, are listed. Some lack ID numbers, and are not activated to appear in the selection box.

Producing resources may at first seem time consuming because the wizard requires input into a number of boxes.

The following sequence explores some of the tools for developing a lesson plan and sequence for Year 12 General English in composing a narrative short story from visual prompts.

Learning Objective Generator

As a starting point, I set out to have the AI generate some learning objectives for the topic. Once the data is entered into the wizard (standards, subject, grade level, key topics) the wizard generates a set of learning outcomes. In this case, I set the wizard to meet SCSA standards, but it is impossible to tell whether it did so. The wizard does not reference the standards or curriculum points that would make the exercise rich, nor does it repeat the prompt information for quick reference to what was asked.

Although somewhat useful, there is a lack of structure to align learning outcomes to common intended learning outcomes approaches that invoke aspects of Bloom's Taxonomy (Georgia Tech 2024) or the five-step approach described by the University of Tasmania (UTAS 2018).

The second step was to generate a lesson sequence. This produced a sequence of five lessons in which students go from demonstrating an understanding of visual prompts; through effectively using text structures, language features, and visual techniques; demonstrating understanding of the writing process by using strategies for planning, drafting, revising, editing, and proofreading; and presenting their narrative to different contexts and purposes; to evaluating their own work and providing constructive feedback to their peers.

The sequence does not differ much from sequences I've seen presented by teachers in planning documents elsewhere. It certainly lacks any fresh ideas, and it focuses on analytical and critical techniques rather than on engagement with art techniques or creative writing practices, in which the practice of thinking and making that thinking comprehensible is the goal of the exercise.

The next step was to use the lesson plan creation tool, which produced a structure that set out the objectives, the essential questions to be explored, and the added input of the teaching method to be employed, in this case Laurillard's Conversational Framework (2012), along with materials required in the textbook and visual prompts.

The lesson plan generated a timed framework of activities that meet some degree of each of Laurillard's six types (although not explicitly identified), with homework added on that reinforces practice. The lesson plan only extended across two days, whereas the sequence specified five days. The AI did not allocate correct time slots: day one comes in at 55 minutes, which is just about right, but day two sums to 80 minutes.
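The timing check I did by hand can be sketched in a few lines of Python. This is a minimal illustration only: the activity names, the individual durations and the 60-minute period length are assumptions of mine, chosen so that the daily totals match the 55 and 80 minutes reported above. Only those two totals and the five-day sequence length come from the experiment.

```python
# Assumed period length in minutes; the article does not state the actual figure.
LESSON_MINUTES = 60
# The generated sequence specified five days (from the text).
SEQUENCE_DAYS = 5

# Hypothetical activities and durations; only the daily totals (55 and 80)
# reflect what the AI-generated plan actually produced.
plan = {
    "Day 1": [("Warm-up discussion", 10), ("Analyse visual prompts", 20),
              ("Guided drafting", 20), ("Wrap-up", 5)],
    "Day 2": [("Review homework", 15), ("Peer feedback", 25),
              ("Revise narrative", 30), ("Reflection", 10)],
}


def check_plan(plan, limit):
    """Return {day: (total_minutes, minutes_over_limit)} for each planned day."""
    report = {}
    for day, activities in plan.items():
        total = sum(minutes for _, minutes in activities)
        report[day] = (total, total - limit)
    return report


report = check_plan(plan, LESSON_MINUTES)
uncovered_days = SEQUENCE_DAYS - len(plan)

for day, (total, over) in report.items():
    status = "over by %d min" % over if over > 0 else "fits"
    print(f"{day}: {total} min ({status})")
print(f"Days in the sequence with no lesson plan: {uncovered_days}")
```

A check of this kind is trivial, which is rather the point: it is the sort of validation a planning tool could run on its own output before presenting it to a teacher.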

When the lessons were modelled in Laurillard's Learning Designer, I rescheduled some of the activities suggested by the AI to improve balance across all six learning types. The textbook reference did not correctly identify the content (the pages nominated do not contain the content the AI specified), and the lesson objectives were concentrated around Bloom's Application level, with one at Evaluation, but nothing of the higher order that is expected of a creative writing topic.

###### Teachflow step 3: lesson plan creator.

Teachflow, used in conjunction with a planner such as Learning Designer (with the capability to export planning directly into Moodle), has real potential to save time in developing unit, topic, and lesson plans and resources.

The key to success will be that the data the AI relies on can be trusted: that it can draw reliably from curriculum specifications, that it can analyse learning texts coherently for the benefit of teachers and students, and that it can identify aspirational intended learning outcomes to help direct the programming.

A final comment about 'the prompt'

At the core of this emerging technology is the dialogic nature of communicating with a machine via written prompts with the goal of developing a better understanding of user context. According to Robin Li, co-founder of Chinese AI giant Baidu, "in ten years, half of the world's jobs will involve prompt engineering, and those who cannot write prompts will become obsolete" (Cited in Korzynski et al. 2023).

Prompt engineering is a rapidly emerging career choice, with AI Tool Report reporting growing job opportunities and correspondingly rising salary offers. To help people train for these roles, AI Tool Report recently launched an online training program to help users develop their prompt skills, "ensuring [prompts] are precisely tailored for effective AI engagement and optimal results" (Crowley and Lawson 2024b).

As can be seen with results from different experiments I have conducted, along with the reports of experiments conducted by others, the quality of the prompt is critical to producing an output that meets the demands.

Korzynski et al. argue that prompt engineering is a human-centric discipline rather than one of information technology: "a human language‐focused practice in which individuals create prompts using unique and separate elements of text, known as distinct tokens" (2023, 26).

Their article set out to explore AI prompt engineering "as a digital competence using a narrative and critical literature review". Understanding the intricacies involved in AI prompt engineering requires examination of different "dimensions, techniques, challenges, and applications across multiple domains".

The main focus is on the development of digital competencies in education, ensuring both students and teachers develop the knowledge and skills to use technology, both legacy and emerging, effectively. Korzynski et al. cite fundamental literacy addressing both technology mastery and a digital citizenship mindset in education. The DigComp framework is one of the most comprehensive developments in this area.

The DigComp framework was created to provide an in-depth and structured approach to understanding digital competence, with the purpose of informing and guiding policies to develop digital competencies for all people (European Commission 2022). The framework identifies five key areas of digital competence, comprising 21 competencies.

The EU's latest publication "provides more than 250 new examples of knowledge, skills and attitudes that help citizens engage confidently, critically and safely with digital technologies, and new and emerging ones such as systems driven by artificial intelligence (AI)" (Vuorikari, Kluzer, and Punie 2022).

Korzynski et al. found that prompt engineering overlaps with 14 of the 21 competencies in the framework. One of the ways they see prompt engineering being tackled is the development of a 'metaprompt' approach designed to "leverage the language model's inherent capabilities and understanding of natural language to create more effective and nuanced prompts" (2023, 28).

This is the approach taken by AI Tool Report discussed above, essentially using the AI agent to refine and craft the prompts to yield better and more accurate results. The authors discuss applications in entrepreneurship, art, science, and healthcare, and detail the components of an effective prompt for text-in-image-out and text-in-text-out scenarios.

In text-in-text-out scenarios, a prompt is created to perform a specific language task, and its architecture varies according to the nature of that task. To structure their engineering recommendations, the authors developed the acronym AIPROMPT.

An effective prompt may include the following elements:

Context -- can include information about the role the model should play for the task or any necessary information about the situation that may ground it ('You are a salesperson in a technology company');

Instruction -- the task to be performed ('Write an email to a customer thanking for high attendance during the last workshop on MLOps');

Input Data -- data for few‐shot learning or information the model should use to perform the task ('Customer name: XYZ, keywords to include = [future, innovation, partnership]');

Expected output format -- information about the format and type of output in which the answer is to be provided ('Provide just the email message content') (Korzynski et al. 2023, 30).
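The four elements above can be treated as slots in a simple template. The following is a minimal sketch, not a method taken from Korzynski et al.: the `PromptParts` class and the `build_prompt` function are hypothetical names of my own, and the example values lightly adapt the authors' salesperson example.

```python
from dataclasses import dataclass


@dataclass
class PromptParts:
    """The four elements of an effective prompt (hypothetical container)."""
    context: str        # role or situation that grounds the model
    instruction: str    # the task to be performed
    input_data: str     # examples or information to draw on
    output_format: str  # shape of the expected answer


def build_prompt(parts: PromptParts) -> str:
    """Join the non-empty elements, in order, separated by blank lines."""
    sections = [parts.context, parts.instruction,
                parts.input_data, parts.output_format]
    return "\n\n".join(s.strip() for s in sections if s.strip())


example = PromptParts(
    context="You are a salesperson in a technology company.",
    instruction=("Write an email to a customer thanking them for high "
                 "attendance during the last workshop on MLOps."),
    input_data=("Customer name: XYZ, keywords to include = "
                "[future, innovation, partnership]"),
    output_format="Provide just the email message content.",
)

prompt = build_prompt(example)
print(prompt)
```

Keeping the elements separate makes it easy for a teacher or student to see which part of a prompt is missing or weak before it is sent to a model, which is the habit of mind that the digital literacy argument is really about.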

As I have discovered in experimenting with AI tools, it is often necessary to combine multiple building blocks to obtain the expected result, as in my earlier experiment with an email, which required extracting the important information from the meeting notes and then generating an email based on that information. It is possible that some of the shortfalls I experienced stem from using the free versions of the tools rather than the paid ones. Given the hefty price tag attached to some of them, it would be reasonable to expect higher performance.
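Combining building blocks in this way amounts to chaining two prompts, with the output of the first feeding the second. The sketch below is illustrative only and is not how any of the tools discussed work internally. The function `email_from_notes` and its `ask` parameter are hypothetical: `ask` stands in for whatever call sends a prompt to a model and returns its text, and the wording of both prompts is my own.

```python
from typing import Callable


def email_from_notes(notes: str, ask: Callable[[str], str]) -> str:
    """Two-step chain: extract key points from notes, then draft an email.

    `ask` is a placeholder for any function that sends a prompt to a
    language model and returns the reply as a string.
    """
    # Step 1: extract the important information from the meeting notes.
    extract_prompt = (
        "You are a research assistant.\n\n"
        "List the key decisions, dates and actions in the meeting notes below.\n\n"
        f"Meeting notes:\n{notes}\n\n"
        "Provide a plain bulleted list only."
    )
    key_points = ask(extract_prompt)

    # Step 2: generate the email from the extracted points, not the raw notes.
    email_prompt = (
        "You are a Year 12 coordinator writing to parents and students.\n\n"
        "Write a short, courteous email covering the key points below.\n\n"
        f"Key points:\n{key_points}\n\n"
        "Provide just the email message content."
    )
    return ask(email_prompt)
```

Because the model call is passed in as a parameter, the chain can be tried with a stand-in function before any real service is connected, and the intermediate list of key points remains available for the human editing that the experiment showed to be necessary.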

If we are to teach students skills in using AI tools, the starting point needs to be with prompt engineering and the associated digital literacies. As Korzynski et al. note: "For educators, our findings may serve as a valuable reference for teaching AI students about the role of prompt engineering in optimizing the performance of language models. The strategies and examples we presented could be integrated into curricula to further enrich the learning experience" (2023, 33).

Conclusion

The widespread adoption of AI tools, notably ChatGPT, across diverse sectors, including education, offers potential advantages in reducing teacher workloads and enhancing student learning outcomes. However, there are concerns regarding privacy, bias, and maintaining originality.

A Parliamentary inquiry into the use of generative AI within education confirms the need for robust guidance around ethics of use and satisfactory and equitable outcomes for students. The benefits and risks associated with GAI in education can be found in the potential for personalised learning experiences, student support, and access to learning by disadvantaged students. However, there are concerns surrounding privacy issues, bias, and erroneous outcomes.

There is a clear need for educator training and the establishment of policies around academic integrity, revising the definition of what constitutes originality, and making sure that student learning is not disadvantaged by regimes of punishment that impact on fair and transparent GAI uses across all levels of education.

There is also a need to address concerns related to privacy, bias, and originality within the realm of GAI technologies, and education is particularly vulnerable. We need robust frameworks and guidelines that encourage critical evaluation of GAI tools like ChatGPT if we are to foster meaningful educational outcomes, stimulate independent thinking among students and teachers, and adequately prepare students for future work.

Meticulous integration and instructional design are critical to maintaining a proper balance between machine and human if we are to effectively explore the potential of GAI tools in enriching learning experiences, particularly in writing, editing, and creativity domains. Continued research is needed to dissect and understand the challenges and constraints presented by the rapid deployment of GAI throughout the education systems.

The potential of generative AI tools to ease teacher workloads is shown through a range of experiments that explore the deployment of GAI tools in educational tasks, while working to align outputs with content, pedagogy, and technological constraints. An experiment with a Year 12 cohort's class meeting, and the subsequent composition of an email to parents and students, points to potential upsides in communications, although care must be taken to enforce human intervention in editing and rewriting to maintain adherence to acceptable standards.

The comparison of two tools aimed at aiding educators in lesson planning and resource creation shows the benefits of ease of use with modern user interfaces, and the challenges that weak data poses. Teachflow shows promise in streamlining lesson planning, especially when coupled with other planning tools, but it needs reliable and contextual data input.

A final use of AI

Using the discussion above (A final comment about 'the prompt') as the input, I tasked AgentGPT with the following:

You are a research assistant. Please provide a summary of fewer than 200 words to suit an academic article of the information that follows. Your output should adhere to Australian Standard English spelling and punctuation, and you should try to model the language on that of the original. The information to summarise... [that section pp 39-42].

The following is the verbatim summary output from the agent, following engagement to reduce the length of the first attempt by about 25 words and to adjust the use of 'emphasize', both because of its inaccuracy (it is a favourite of ChatGPT, it seems) and because of its non-SAE spelling. The first attempt was too short and abrupt, so a second attempt was made. Although it lacks nuance, it isn't a bad effort.

Prompt engineering is an emerging technology that involves communicating with a machine through written prompts to better understand user context. Robin Li, co-founder of Baidu, predicts that prompt engineering will be a crucial skill in the future job market. An online training program has been launched by AI Tool Report to help people develop their prompt skills.

Korzynski et al. argue that prompt engineering is a human-centric discipline and explore its dimensions, techniques, challenges, and applications. The DigComp framework provides guidance for developing digital competencies, including prompt engineering. The model from Korzynski et al. overlaps with 14 of the 21 competencies in the framework. The 'metaprompt' approach leverages language model capabilities for more effective prompts. AIPROMPT is an acronym used to structure engineering recommendations.

Effective prompts include context, instruction, input data, and output format. Teaching prompt engineering and digital literacies is vital for students to gain AI tool skills. Educators can integrate prompt engineering strategies and examples into their curricula to enhance learning.

Works cited.

AATA. 2023. "A Submission in Response to the House Standing Committee on Employment, Education and Training Inquiry into AI in Education."

AgentGPT, and Kevin Price. 2024a. "AgentGPT: SCSA and ACARA Specification Access." February 24, 2024. https://agentgpt.reworkd.ai/.

---------. 2024b. "AgentGPT: Email to Parents." March 1, 2024. https://agentgpt.reworkd.ai/.

AI4K12. 2021. "Grade Band Progression Charts." AI4K12. June 29, 2021. https://ai4k12.org/gradeband-progression-charts/.

---------. n.d. "AI4K12." AI4K12. Accessed February 25, 2024. https://ai4k12.org/.

Australian Government. n.d. "Dictionaries: An Indispensable Guide for Writing and Style | Style Manual." Accessed March 2, 2024. https://www.stylemanual.gov.au/blog/dictionaries-indispensable-guide-writing-and-style.

Bacchi, Carol. 2009. *Analysing Policy: What's the Problem Represented to Be?* AU: Pearson Higher Education. https://scholar.google.com/scholar_lookup?hl=en&publication_year=2009&author=C.+Bacchi&title=Analysing+Policy%3A+What%E2%80%99s+the+Problem+Represented+to+Be%3F.

Bala, Kavita, and Alex Colvin. 2023. "CU Committee Report: Generative Artificial Intelligence for Education and Pedagogy | Center for Teaching Innovation." July 18, 2023. https://teaching.cornell.edu/generative-artificial-intelligence/cu-committee-report-generative-artificial-intelligence-education#Section1.

Bedington, Andelyn, Emma F. Halcomb, Heidi A. McKee, Thomas Sargent, and Adler Smith. 2024. "Writing with Generative AI and Human-Machine Teaming: Insights and Recommendations from Faculty and Students." *Computers and Composition* 71 (March): 102833. https://doi.org/10.1016/j.compcom.2024.102833.

The Conference Board. 2023. "Survey: Majority of US Workers Are Already Using Generative AI Tools--But Company Policies Trail Behind." September 13, 2023. https://www.prnewswire.com/news-releases/survey-majority-of-us-workers-are-already-using-generative-ai-toolsbut-company-policies-trail-behind-301925743.html.

Briggs, Joseph, Devesh Kodnani, Jan Hatzius, and Giovanni Pierdomenico. 2023. "The Potentially Large Effects of Artificial Intelligence on Economic Growth." Global Economics Analysis. USA: Goldman Sachs. https://www.gspublishing.com/content/research/en/reports/2023/03/27/d64e052b-0f6e-45d7-967b-d7be35fabd16.html.

Cain, Sian. 2023. "'This Song Sucks': Nick Cave Responds to ChatGPT Song Written in Style of Nick Cave." *The Guardian*, January 17, 2023, sec. Music. https://www.theguardian.com/music/2023/jan/17/this-song-sucks-nick-cave-responds-to-chatgpt-song-written-in-style-of-nick-cave.

Chahar. 2024. "Education Copilot Contact?" February 15, 2024. https://answers.microsoft.com/en-us/bing/forum/all/education-copilot-contact/6032e889-9a97-46c1-9905-9189b7051811.

ChatGPT. 2024. "Education Specifications Query." February 24, 2024. https://chat.openai.com.

ChatGPT, and Kevin Price. 2024. "ChatGPT Maths Problem Generation." February 12, 2024. https://chat.openai.com.

Commonwealth Parliament, Canberra. 2023. "Inquiry into the Use of Generative Artificial Intelligence in the Australian Education System." May 24, 2023. https://www.aph.gov.au/Parliamentary_Business/Committees/House/Employment_Education_and_Training/AIineducation.

Crowley, Martin, and Liam Lawson. 2024a. "OpenAI Partners with Common Sense." AI Tool Report. January 30, 2024. https://aitoolreport.beehiiv.com/p/openai-partners-with-common-sense.

---------. 2024b. "Softbank to Rival NVIDIA with $100B AI Project." AI Tool Report. February 19, 2024.

---------. 2024c. "New Memory Feature for ChatGPT." AI Tool Report. February 22, 2024. https://aitoolreport.beehiiv.com/p/new-memory-feature-for-chatgpt.

Curtin University. 2023. "Curtin University Responses for The House Standing Committee on Employment, Education and Training Report on the Use of Generative Artificial Intelligence in the Australian Education System."

Day, Katherine, Renée Otmar, Rose Michael, and Sharon Mullins. 2024. "Can ChatGPT Edit Fiction? 4 Professional Editors Asked AI to Do Their Job -- and It Ruined Their Short Story." The Conversation. February 12, 2024. http://theconversation.com/can-chatgpt-edit-fiction-4-professional-editors-asked-ai-to-do-their-job-and-it-ruined-their-short-story-216631.

Department of Education, Canberra. 2024. "Australian Framework for Generative Artificial Intelligence (AI) in Schools." January 31, 2024. https://www.education.gov.au/schooling/resources/australian-framework-generative-artificial-intelligence-ai-schools.

Dougiamas, Martin. 2023. "Submission to Standing Committee on Generative AI from Martin Dougiamas Moodle."

Druga, Stefania, Nancy Otero, and Amy J. Ko. 2022. "The Landscape of Teaching Resources for AI Education." In *Proceedings of the 27th ACM Conference on Innovation and Technology in Computer Science Education Vol. 1*, 96--102. ITiCSE '22. New York, NY, USA: Association for Computing Machinery. https://doi.org/10.1145/3502718.3524782.

Dwivedi, Yogesh K., Nir Kshetri, Laurie Hughes, Emma Louise Slade, Anand Jeyaraj, Arpan Kumar Kar, Abdullah M. Baabdullah, et al. 2023. "Opinion Paper: 'So What If ChatGPT Wrote It?' Multidisciplinary Perspectives on Opportunities, Challenges and Implications of Generative Conversational AI for Research, Practice and Policy." *International Journal of Information Management* 71 (August): 102642. https://doi.org/10.1016/j.ijinfomgt.2023.102642.

Education Copilot. n.d. "AI Lesson Planner." Education Copilot. Accessed March 2, 2024. https://educationcopilot.com/.

European Commission. 2022. "DigComp Framework - European Commission." 2022. https://joint-research-centre.ec.europa.eu/digcomp/digcomp-framework_en.

Georgia Tech. 2024. "Developing Student Learning Outcome Statements | Office of Academic Effectiveness." 2024. https://academiceffectiveness.gatech.edu/assessment-toolkit/developing-student-learning-outcome-statements.

Giannini, Stefania. 2023. "Generative AI and the Future of Education - UNESCO Digital Library." July 2023. https://unesdoc.unesco.org/ark:/48223/pf0000385877.

Gordon, Cindy. 2023. "ChatGPT Is The Fastest Growing App In The History Of Web Applications." Forbes. February 2, 2023. https://www.forbes.com/sites/cindygordon/2023/02/02/chatgpt-is-the-fastest-growing-ap-in-the-history-of-web-applications/.

Habib, Sabrina, Thomas Vogel, Xiao Anli, and Evelyn Thorne. 2024. "How Does Generative Artificial Intelligence Impact Student Creativity?" *Journal of Creativity* 34 (1): 100072. https://doi.org/10.1016/j.yjoc.2023.100072.

Hansard. 2024. "Inquiry into the Use of Generative Artificial Intelligence in the Australian Education System." Australia. https://parlinfo.aph.gov.au/parlInfo/search/display/display.w3p;query=Id%3A%22committees%2Fcommrep%2F27676%2F0000%22.

Karvellas. 2024. "'Risks Have Been Identified': Govt Announces AI Expert Group." ABC Listen. February 14, 2024. https://www.abc.net.au/listen/programs/radionational-breakfast/-risks-have-been-identified-govt-announces-ai-expert-group-/103468914.

Kennedy, Gregor. 2024. "Submission to the House Standing Committee Inquiry into the Use of Generative AI in the Education System." University of Melbourne.

Koehler, Matthew, and Punya Mishra. 2009. "What Is Technological Pedagogical Content Knowledge (TPACK)?" *Contemporary Issues in Technology and Teacher Education* 9 (1): 60--70. https://www.learntechlib.org/primary/p/29544/.

Korzynski, Pawel, Grzegorz Mazurek, Pamela Krzypkowska, and Kurasinski. 2023. "Artificial Intelligence Prompt Engineering as a New Digital Competence: Analysis of Generative AI Technologies Such as ChatGPT." *Entrepreneurial Business and Economics Review* 11 (3): 25--38. https://www.ceeol.com/search/article-detail?id=1205908.

Laurillard, Diana. 2012. *Teaching as a Design Science: Building Pedagogical Patterns for Learning and Technology*. New York, NY: Routledge. https://doi.org/10.4324/9780203125083.

Luo, (Jess) Jiahui. 2024. "A Critical Review of GenAI Policies in Higher Education Assessment: A Call to Reconsider the 'Originality' of Students' Work." *Assessment & Evaluation in Higher Education* 0 (0): 1--14. https://doi.org/10.1080/02602938.2024.2309963.

Makin, Sean. 2023. "Will AI Replace Graphic Designers?" April 14, 2023. https://www.linkedin.com/pulse/ai-replace-graphic-designers-sean-makin.

Markauskaite, Lina, Rebecca Marrone, Oleksandra Poquet, Simon Knight, Roberto Martinez-Maldonado, Sarah Howard, Jo Tondeur, et al. 2022. "Rethinking the Entwinement between Artificial Intelligence and Human Learning: What Capabilities Do Learners Need for a World with AI?" *Computers and Education: Artificial Intelligence* 3 (January): 100056. https://doi.org/10.1016/j.caeai.2022.100056.

Masood, Raisa. 2023. "Guide to ChatGPT for Parents and Caregivers | Common Sense Media." August 30, 2023. https://www.commonsensemedia.org/articles/guide-to-chatgpt-for-parents-and-caregivers.

Microsoft Education Team. 2023. "Expanding Microsoft Copilot Access in Education." Microsoft Education Blog. December 14, 2023. https://educationblog.microsoft.com/en-us/2023/12/expanding-microsoft-copilot-access-in-education.

Morgan Stanley. 2023. "Generative AI Is Set to Shake Up Education." Morgan Stanley. December 22, 2023. https://www.morganstanley.com/ideas/generative-ai-education-outlook.

OpenAI. 2024. "What Is ChatGPT? | OpenAI Help Center." February 2024. https://help.openai.com/en/articles/6783457-what-is-chatgpt.

Purtill. 2024. "AI Killed Leanne's Copywriting Business. Now She Earns a Living Teaching How to Use ChatGPT." *ABC News*, February 14, 2024. https://www.abc.net.au/news/science/2024-02-15/freelance-copywriters-artificial-intelligence-ai-automate-work/103413972.

Roberts, Nicholas, Jason Bennett Thatcher, and Richard Klein. 2006. "Mindfulness in the Domain of Information Systems." *DIGIT 2006 Proceedings*, January. https://aisel.aisnet.org/digit2006/2.

SA Dept. of Education. 2024. "Submission to the House Standing Committee Inquiry into the Use of Generative AI in the Education System." Department for Education South Australia.

Selber, Stuart A. 2004. *Multiliteracies for a Digital Age*. SIU Press.

Teachflow. 2023. "Home - Teachflow.AI." May 11, 2023. https://teachflow.ai/.

Tong, Anna. 2024. "OpenAI Launches GPT Store to Capitalize on ChatGPT's Consumer Success | Reuters." January 11, 2024. https://www.reuters.com/technology/openai-launches-gpt-store-capitalize-chatgpts-consumer-success-2024-01-10/.

UTAS. 2018. "How to Write ILOs - Teaching & Learning." Teaching & Learning - University of Tasmania, Australia. 2018. https://www.teaching-learning.utas.edu.au/ilo/writing.

Vuorikari, Riina, Stefano Kluzer, and Yves Punie. 2022. "DigComp 2.2: The Digital Competence Framework for Citizens - With New Examples of Knowledge, Skills and Attitudes." JRC Publications Repository. March 17, 2022. https://doi.org/10.2760/115376.

Waddell. 2024. "The Right Tool for the Right Job: Understanding Where AI Helps (and Where It Can't)." PerfectIt. February 27, 2024. https://www.perfectit.com/blog/the-right-tool-for-the-right-job-understanding-where-ai-helps-and-where-it-cant.

Wiggers, Kyle. 2024. "OpenAI Partners with Common Sense Media to Collaborate on AI Guidelines." *TechCrunch* (blog). January 29, 2024. https://techcrunch.com/2024/01/29/openai-partners-with-common-sense-media-to-collaborate-on-ai-guidelines/.